Junli Ren*, Junfeng Long*, Tao Huang, Huayi Wang, Zirui Wang, Feiyu Jia, Wentao Zhang, Jingbo Wang†, Ping Luo†, Jiangmiao Pang†. Preprint. [Project Page] [Paper] [Video] [Code] [BibTeX] Humanoid Goalkeeper learns a single end-to-end RL policy that executes agile, human-like motions to intercept flying balls and also performs tasks such as evading a ball by jumping or squatting.

Tao Huang, Huayi Wang, Junli Ren, Kangning Yin, Zirui Wang, Xiao Chen, Feiyu Jia, Wentao Zhang, Junfeng Long, Jingbo Wang†, Jiangmiao Pang†. International Conference on Robotics and Automation (ICRA), 2026. [Project Page] [Paper] [Video] [Code] [BibTeX] We introduce AdaMimic, a novel motion-tracking algorithm that enables adaptable humanoid control from a single reference motion.

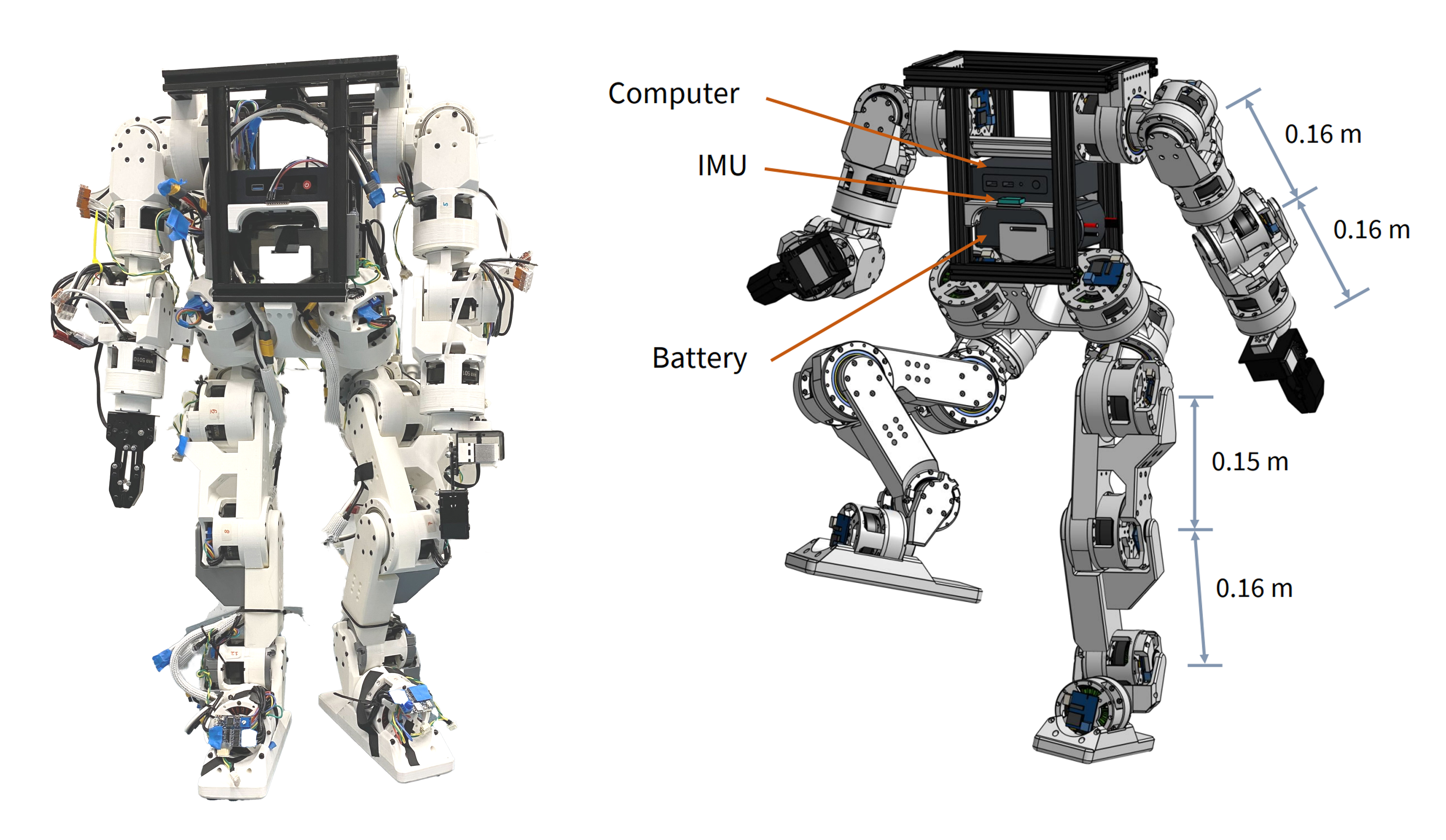

Yufeng Chi, Qiayuan Liao, Junfeng Long, Xiaoyu Huang, Sophia Shao, Borivoje Nikolic, Zhongyu Li, Koushil Sreenath. Robotics: Science and Systems (RSS), 2025. [Project Page] [Paper] [Code] [Documentation] [BibTeX] We build a fully open-source, accessible, and customizable humanoid robot that costs no more than $5,000!

Junli Ren, Tao Huang, Huayi Wang, Zirui Wang, Qingwei Ben, Junfeng Long, Yanchao Yang, Jiangmiao Pang†, Ping Luo†. International Conference on Robotics and Automation (ICRA), 2026. [Project Page] [Paper] [Video] [BibTeX] We propose VB-Com, a composite framework that enables humanoid robots to decide when to rely on the vision policy and when to switch to the blind policy under perceptual deficiency.

Junfeng Long*, Junli Ren*, Moji Shi*, Zirui Wang, Tao Huang, Ping Luo, Jiangmiao Pang†. International Conference on Robotics and Automation (ICRA), 2025. [Project Page] [Paper] [Code] [BibTeX] We propose the Perceptive Internal Model (PIM), a method that estimates environmental disturbances from perceptive information, enabling agile and robust locomotion across diverse humanoid robots and terrains.

Junfeng Long*, Wenye Yu*, Quanyi Li*, Zirui Wang, Dahua Lin, Jiangmiao Pang†. Conference on Robot Learning (CoRL), 2024. Best Poster Award at the LocoLearn Workshop. [Project Page] [Paper] [Code] [BibTeX] We present H-infinity Locomotion Control, an adversarial framework that improves the control policy's ability to resist external disturbances, with an H-infinity performance guarantee.

Junli Ren*, Yikai Liu*, Yingru Dai, Junfeng Long, Guijin Wang†. Conference on Robot Learning (CoRL), 2024. [Project Page] [Paper] [Code] [BibTeX] We propose TOP-Nav, a novel legged navigation framework that integrates a comprehensive path planner with Terrain awareness, Obstacle avoidance, and closed-loop Proprioception.

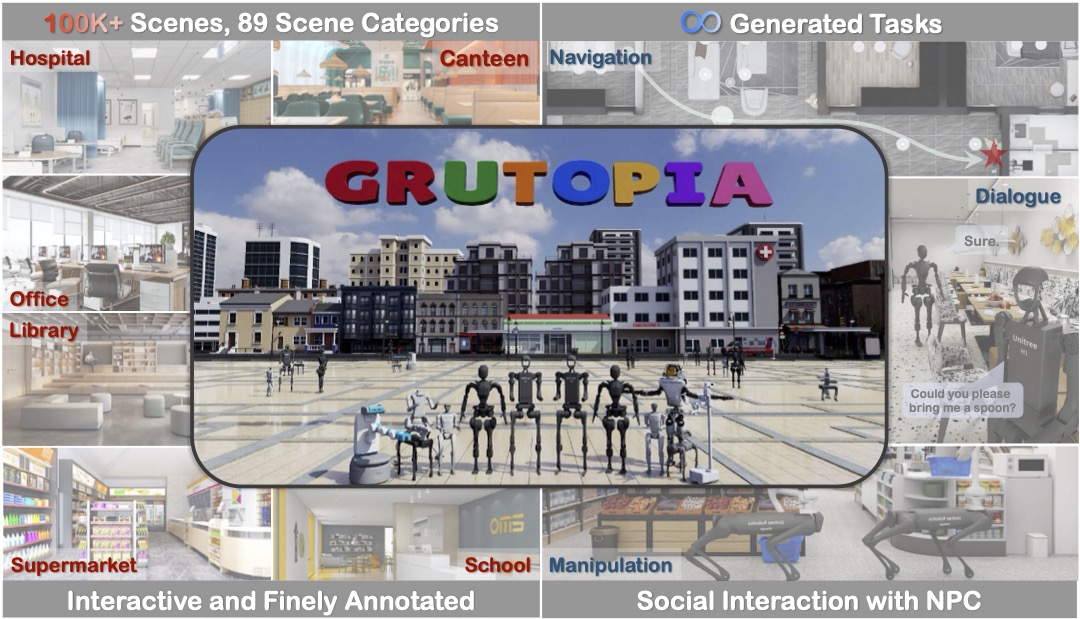

Hanqing Wang*, Jiahe Chen*, Wensi Huang*, Qingwei Ben*, Tai Wang*, Boyu Mi*, Tao Huang, Siheng Zhao, Yilun Chen, Sizhe Yang, Peizhou Cao, Wenye Yu, Zichao Ye, Jialun Li, Junfeng Long, Zirui Wang, Huiling Wang, Ying Zhao, Zhongying Tu, Yu Qiao, Dahua Lin, Jiangmiao Pang†. Under Review, 2024. [Project Page] [Paper] [Code] [BibTeX] We propose GRUtopia, the first simulated interactive 3D society designed for various robots. It features (a) GRScenes, a dataset of 100k interactive, finely annotated scenes; (b) GRResidents, an LLM-driven NPC system; and (c) GRBench, a benchmark posing moderately challenging tasks.

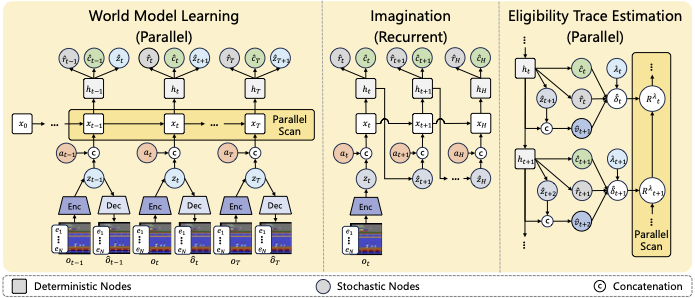

Zirui Wang, Yue Deng, Junfeng Long, Yin Zhang†. Annual Conference on Neural Information Processing Systems (NeurIPS), 2024. [Paper] [Code] [BibTeX] We propose the Parallelized Model-based Reinforcement Learning (PaMoRL) framework. PaMoRL introduces two novel techniques, the Parallel World Model (PWM) and Parallelized Eligibility Trace Estimation (PETE), to parallelize both the model-learning and policy-learning stages of current MBRL methods over the sequence length.

Junfeng Long*, Zirui Wang*, Quanyi Li, Jiawei Gao, Liu Cao, Jiangmiao Pang†. International Conference on Learning Representations (ICLR), 2024. [Project Page] [Paper] [Code] [BibTeX] We present the Hybrid Internal Model, a method that enables the control policy to estimate environmental disturbances by explicitly estimating only the velocity and implicitly simulating the system's response.

Haoning Chen, Junfeng Long, Shuai Ma, Mingjian Tang, Youlong Wu (α-β order). IEEE Transactions on Communications (TCOM), 2023. [Paper] [BibTeX] We propose a coded scheme that reduces communication latency by exploiting the computation and communication capabilities of all nodes and creating coded multicast opportunities. More importantly, we prove that the proposed scheme is always optimal, i.e., it achieves the minimum communication latency for arbitrary computing and storage abilities at the master.

Best Poster Award of LocoLearn Workshop at CoRL 2024

Updated in Oct 2025.